bring your own pen device

On or about March 8th of this year the Cooper Hewitt Smithsonian Design Museum pulled the Pen from the floor as part of a larger effort to prevent the spread of Covid-19. The Pen is an interactive stylus, given to every visitor, that lets them collect objects on display by scanning wall labels with embedded NFC tags.

March 8th was two days before the fifth anniversary of the Pen being officially deployed in the museum. By March, it already seemed clear that the Pens were set to be retired by the museum and I expect they will never grace the floors of the galleries again, regardless of whether we return to a world of communal touching.

At the time we were trying to turn the Pen into a reality, the ability to scan arbitrary NFC tags in iOS devices was a holy grail in museum circles, always just around the corner but never actually possible. It was always possible to read and write NFC tags in Android devices. We used an Android tablet to configure the NFC tags in the wall labels at the Cooper Hewitt. The absence of that functionality in Apple products and the uncertainty of when it might happen meant that the so-called "bring your own device" argument, as an alternative to developing the Pen, was moot.

Sometime last year, four years after the Pen launched, Apple started shipping iOS devices with built-in NFC readers and public APIs for using them. Recently, I got my hands on one of those devices. I have a wall label from the Cooper Hewitt with an embedded NFC tag that we used for testing so I decided to see what it would be like to use an iPhone, instead of the Pen, for scanning tags and saving objects.

This is the result of about a day's work, stretched over the course of a week. It was a way to scratch a long-standing itch and also an attempt to think about how we preserve some of what the Pen made possible in a world where we are afraid to touch anything that doesn't already belong to us. It's also an interesting problem for a museum like SFO Museum which, by virtue of being in an airport, is not in a position to hand out bespoke electronic hardware to its visitors.

The application reads and parses the NFC tag in the wall label and then fetches the object title and image using the Cooper Hewitt oEmbed endpoint. The oEmbed standard doesn't provide any means for a person to "save" or "collect" an object but it was the easiest and fastest way to prove the basic functionality of a tag-scanning application without getting bogged down in a lot of Cooper Hewitt API-specific details.
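The lookup step described above can be sketched in a few lines. This is a minimal sketch in Python, not the application's own code: the endpoint `https://museum.example/oembed`, the object URL and the sample response body are all placeholders. The only things taken from the oEmbed specification are the `url` and `format` request parameters and the `type`, `title` and `url` response fields.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint, used only for illustration.
OEMBED_ENDPOINT = "https://museum.example/oembed"


def build_oembed_request(object_url: str, endpoint: str = OEMBED_ENDPOINT) -> str:
    """Return the URL a consumer would fetch to look up an object."""
    query = urlencode({"url": object_url, "format": "json"})
    return f"{endpoint}?{query}"


def parse_oembed_photo(body: str) -> dict:
    """Pull the title and image URL out of an oEmbed "photo" response."""
    data = json.loads(body)
    if data.get("type") != "photo":
        raise ValueError(f"expected a photo response, got {data.get('type')!r}")
    return {"title": data.get("title", ""), "image": data["url"]}


# A made-up response body standing in for what an endpoint might return.
sample = json.dumps({
    "version": "1.0",
    "type": "photo",
    "title": "Example object",
    "url": "https://museum.example/images/123.jpg",
    "width": 640,
    "height": 480,
})

request_url = build_oembed_request("https://museum.example/objects/123/")
record = parse_oembed_photo(sample)
```

Nothing here touches the network. A real application would fetch `request_url` and hand the response body to `parse_oembed_photo`.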

This is similar to the approach that we've taken developing a general-purpose web application for geotagging photos at SFO Museum, using oEmbed as a common means for retrieving collection information. If the museum sector is still looking for simple, cost-effective and sustainable approaches to implement cross-institutional publishing of their collections then providing oEmbed endpoints is a good place to start. I'll talk about this more later in the post.

Once that was done, and even though I knew it wouldn't work, I implemented all the necessary API and authentication details to save an object back to my Cooper Hewitt account.

At the same time that we launched the Pen we also enabled the ability for people to save objects to a "shoebox" on the collection.cooperhewitt.org website. If you look at what the website is doing "under the hood" it's easy to see that the site itself is calling a `cooperhewitt.shoebox.items.collectItem` API method. Only the Cooper Hewitt website can use this API method and that's a decision I made five years ago.

In retrospect that decision not to make the API method generally available to the public now seems, perversely, to mirror Apple's decision to limit access to the embedded NFC chips on its phones to a finite set of applications that they controlled.

Here are some of the other things I learned, or re-learned, in the process of developing the application:

- The Cooper Hewitt API does not bother with OAuth2 client secrets and only requires an OAuth2 client ID when an application authenticates a user.

- The Cooper Hewitt API passes back a `code` parameter rather than a `token` parameter during the authentication flow. This appears to just be a question of convention and nomenclature and not a bug. I wrote this code but I don't remember what I was thinking at the time.

- The Cooper Hewitt API does not require or return the `state` parameter during the authentication flow. This is not technically a bug but really ought to be treated like one.

- The `you.cooperhewitt.org` login endpoint does not forward all the query parameters on to the Cooper Hewitt website. The consequence of this is that the authentication flow will fail for people who aren't already logged in to the Cooper Hewitt website. This is definitely a bug.

- As mentioned, the `cooperhewitt.shoebox.items.collectItem` API method was never made available to the general public.
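To illustrate why the missing `state` parameter matters, here is a minimal sketch, in Python, of what a client does when a server does echo `state` back. It is not the Cooper Hewitt flow, which by the account above returns no `state` at all. The authorization URL, the redirect URI and the function names are hypothetical. The parameter names (`client_id`, `response_type`, `redirect_uri`, `state`, `code`) are the standard OAuth2 ones.

```python
import hmac
import secrets
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical values, for illustration only.
AUTHORIZE_URL = "https://auth.example/oauth2/authenticate"
REDIRECT_URI = "myapp://oauth/callback"


def begin_authorization(client_id: str) -> tuple[str, str]:
    """Return (url, state); the caller keeps state until the callback arrives."""
    state = secrets.token_urlsafe(16)
    query = urlencode({
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": REDIRECT_URI,
        "state": state,
    })
    return f"{AUTHORIZE_URL}?{query}", state


def handle_callback(callback_url: str, expected_state: str) -> str:
    """Return the code parameter, refusing callbacks whose state does not match."""
    params = parse_qs(urlparse(callback_url).query)
    returned_state = params.get("state", [""])[0]
    # Constant-time comparison of the value we sent with the value we got back.
    if not hmac.compare_digest(returned_state.encode(), expected_state.encode()):
        raise ValueError("state mismatch: callback does not belong to this request")
    code = params.get("code", [""])[0]
    if not code:
        raise ValueError("callback is missing the code parameter")
    return code
```

Without a `state` value to compare, the application has no way to tell whether a callback belongs to a request it started itself.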

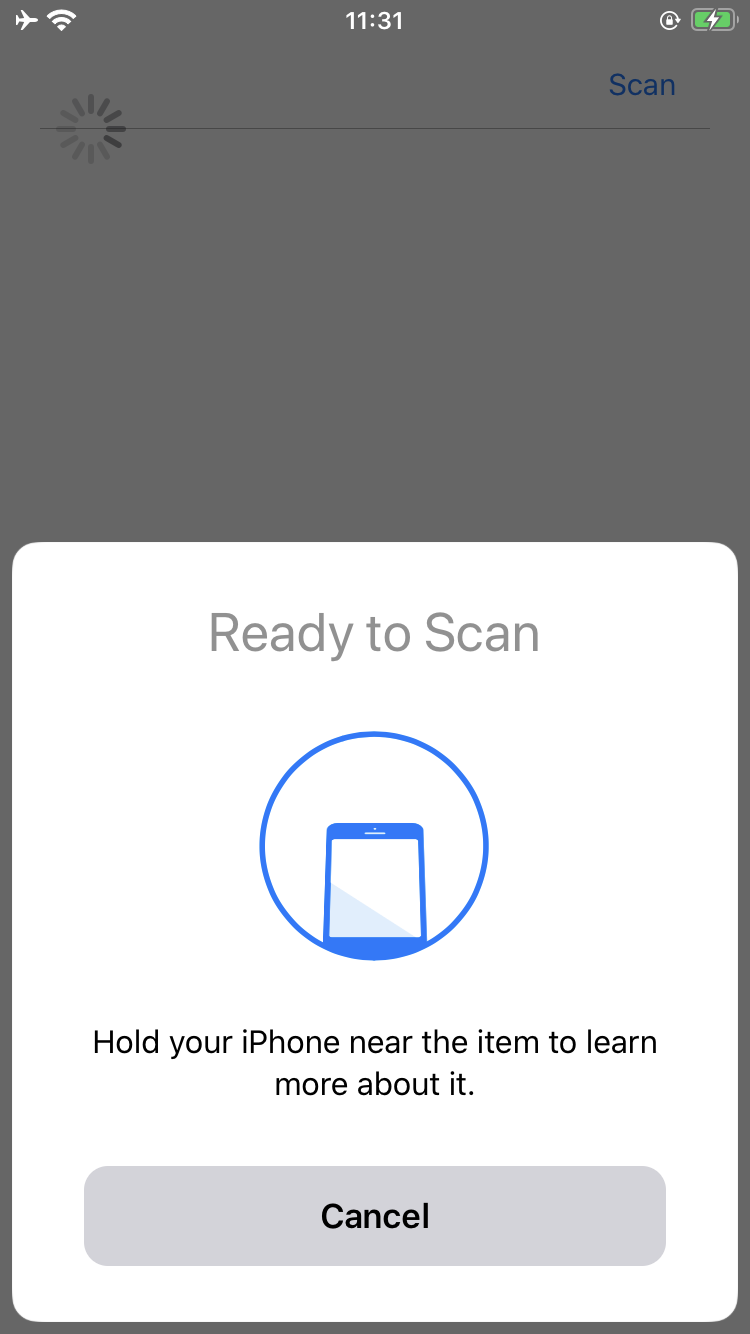

Reading NFC tags on a phone is still not obvious, though.

Apple provides some default dialog messages and interface elements to notify a person that reading NFC tags is possible but there is nothing to illustrate where that person is supposed to place their phone over an NFC tag or the strength of a tag's signal. I have attempted to address this by placing an "activity indicator" in the application's user interface around the area where reading tags works best but I doubt the NFC reader is in the same place on different devices. This is the sort of thing that would be useful to have baked into the operating system.

It also highlights and reinforces the work that Timo Arnall has done to describe how we make technologies like NFC visible to users.

Everything involving the Cooper Hewitt API is literally a "30 minutes to a couple hours" sized project to fix but it doesn't seem like the museum has much of an appetite for addressing these issues anymore. I forfeited my right to do anything about that when I left the museum so it is what it is.

Absent the ability to save objects back to my Cooper Hewitt account, the application can also save things locally in a SQLite database. So far that's all it does. There is no interface for showing or editing saved objects yet. I would love some help with that.
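For a sense of how little is involved in saving locally, here is a minimal sketch using Python's built-in `sqlite3` module. It is not the application's actual schema: the `saved_objects` table, its columns and the function names are assumptions made for illustration.

```python
import sqlite3
from datetime import datetime, timezone


def open_database(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    # Hypothetical schema: one row per saved object, keyed by its identifier.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS saved_objects (
               object_id TEXT PRIMARY KEY,
               title     TEXT NOT NULL,
               image_url TEXT,
               saved_at  TEXT NOT NULL
           )"""
    )
    return conn


def save_object(conn: sqlite3.Connection, object_id: str, title: str, image_url: str) -> None:
    """Save an object; saving the same object twice leaves a single row."""
    saved_at = datetime.now(timezone.utc).isoformat()
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR IGNORE INTO saved_objects VALUES (?, ?, ?, ?)",
            (object_id, title, image_url, saved_at),
        )


def list_saved(conn: sqlite3.Connection) -> list:
    """Return (object_id, title) pairs in the order they were saved."""
    rows = conn.execute("SELECT object_id, title FROM saved_objects ORDER BY saved_at")
    return rows.fetchall()


conn = open_database()
save_object(conn, "123", "Example object", "https://museum.example/images/123.jpg")
save_object(conn, "123", "Example object", "https://museum.example/images/123.jpg")
saved = list_saved(conn)
```

Keying the table on the object identifier means scanning the same wall label twice does not produce duplicates, which is one reasonable choice among several.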

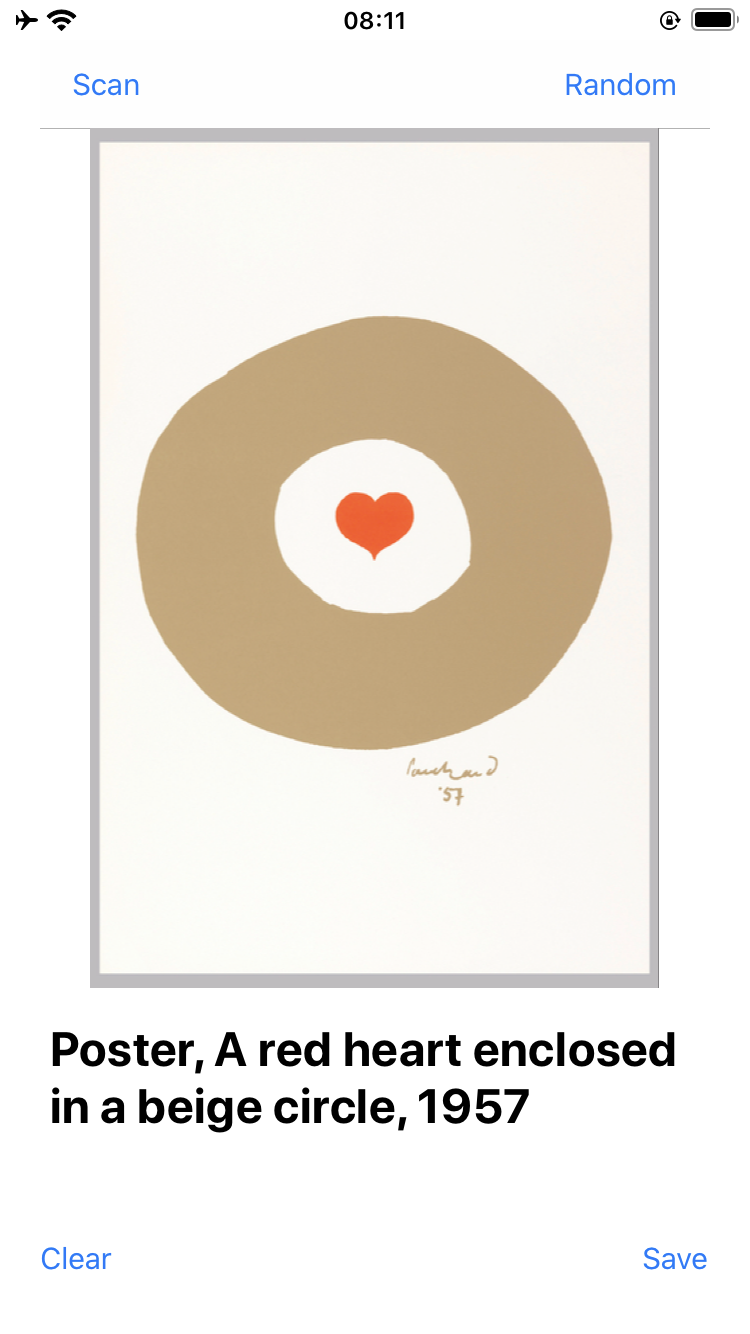

With only one NFC-enabled wall label, the application I started building was of limited use. Since I had eventually implemented all the necessary code to use the Cooper Hewitt API I realized I could also add a "random" button which would call the `cooperhewitt.objects.getRandom` API method and display any one of the 200,000 objects in the museum's collection. Which I can at least save to the local database on my phone. Maybe some day I will be able to save them to my Cooper Hewitt account.

Assuming that Cooper Hewitt is going to retire the Pen there would seem to be little reason to continue producing and embedding NFC tags with object IDs in its wall labels. By the time the museum re-opens those wall labels might have been replaced too and even if I took my application into the museum there wouldn't be anything to scan.

That would be a shame because the underlying motivation for the Pen was to enable visitors to remember their visit to the museum without needing to spend all their time working to remember their visit during their time at the museum. The promise of the Pen was that it afforded people the means to remember an object simply by touching its wall label. The role of the museum in this activity was not to dictate the terms of that remembering, only to make it possible.

Think about the number of people you see in museums taking pictures of objects or their wall labels. They are doing what the Pen was supposed to do but without any means to connect those photos back to the objects themselves, or the broader collection. If all those wall labels contained an NFC tag that broadcast an object's unique identifier then a museum would have taken meaningful steps towards making a shared remembering, a remembering that implicitly involves the museum, possible.

The phrase "NFC tag" should be understood to mean "any equivalent technology". Everything I've described could be implemented using QR codes and camera-based applications. QR codes introduce their own aesthetic and design challenges given the limited space on museum wall labels but when weighed against the cost and complexity of deploying embedded NFC tags they might be a better choice for some museums.

I still believe that the Pen was, and remains, the best physical means to achieve that goal of remembering but there is no denying that something like the Pen demands an investment in time, staff, capital, and now health and safety, resources outside the means of many institutions.

Earlier in this post I wrote that "If the museum sector is still looking for simple, cost-effective and sustainable approaches to implement cross-institutional publishing of their collections then providing oEmbed endpoints is a good place to start."

An oEmbed endpoint that was paired with stable identifiers embedded in wall labels would make it possible for any museum to offer its visitors the same agency to remember that the Pen did.

Providing an oEmbed endpoint is not a zero-cost proposition but, setting aside the technical details for another post, I can say with confidence it's not very hard or expensive either. If a museum has ever put together a spreadsheet for ingesting their collection into the Google Art Project, for example, they are about 75% of the way towards making it possible. I may build a tool to deal with the other 25% soon but anyone on any of the digital teams in the cultural heritage sector could do the same. Someone should build that tool and it should be made broadly available to the sector as a common good.
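As a rough idea of what the "other 25%" might involve, here is a minimal sketch in Python that turns spreadsheet rows into oEmbed "photo" responses keyed by object URL. It is not the tool mentioned above, and the CSV column names (`object_url`, `title`, `image_url`, `image_width`, `image_height`) are invented for illustration. A real collection export would have its own columns. The response fields themselves (`version`, `type`, `title`, `url`, `width`, `height`, `provider_name`, `provider_url`) come from the oEmbed specification.

```python
import csv
import io
import json


def row_to_oembed(row: dict, provider_name: str, provider_url: str) -> dict:
    """Map one spreadsheet row (hypothetical column names) to an oEmbed photo response."""
    return {
        "version": "1.0",
        "type": "photo",
        "title": row["title"],
        "url": row["image_url"],
        "width": int(row["image_width"]),
        "height": int(row["image_height"]),
        "provider_name": provider_name,
        "provider_url": provider_url,
    }


def build_lookup(csv_text: str, provider_name: str, provider_url: str) -> dict:
    """Return a dictionary of oEmbed responses keyed by object URL."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {
        row["object_url"]: row_to_oembed(row, provider_name, provider_url)
        for row in reader
    }


# A made-up two-line spreadsheet standing in for a collection export.
sample_csv = (
    "object_url,title,image_url,image_width,image_height\n"
    "https://museum.example/objects/123/,Example object,"
    "https://museum.example/images/123.jpg,640,480\n"
)

lookup = build_lookup(sample_csv, "Example Museum", "https://museum.example/")
response_body = json.dumps(lookup["https://museum.example/objects/123/"])
```

An endpoint built on this would take the `url` query parameter, look it up in the dictionary and return the matching JSON, or a 404 if there is no match.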

Providing an oEmbed endpoint does not answer the question of how a visitor saves or remembers an object. oEmbed simply defines a standard way for an application to look up information for an object ID stored in an NFC tag, whether it's an app on your phone or a bespoke physical device.

Essentially, it’s a lightweight API that doesn’t require authentication or credentials which, when given a URL, returns just enough information to display an image and suitable attribution.

Importantly, for the cultural heritage sector, it is simple and straightforward enough that it is realistic to expect that we could all implement it. Critically, we could implement it for the benefit of all of our visitors rather than any one institution and, with the passage of time, to the benefit of all our collections.

I am not very optimistic this will ever happen but I continue to believe it's a goal worth pursuing. To that end I've published the source code for the iOS NFC tag-scanning (and random object) application I've been working on:

github.com/aaronland/ios-wunderkammer

It should be considered an alpha-quality application. It lacks documentation and is still very much specific to the Cooper Hewitt. I will continue to improve it as time and circumstance permit, specifically with an eye towards making it work with any institution that follows a limited set of guidelines. I would love some help if anyone is interested.

I built this application because I wanted to prove that it is within the means of cultural heritage institutions to do what we did with the Pen, at Cooper Hewitt, sector-wide. I built it because it is the application that I want to have when I go to every museum.
